OpenAI bots

Virtual agents are currently in open beta. They come with a limited free trial each month; beyond those limits, extra fees apply. For more information, check the pricing page.

OpenAI bots are powered by the GPT-4o engine and the Realtime API technology. They can answer questions, engage in conversations, share information, summarize meetings, send emails, analyze interactions, and more. They can also understand their environment and interact with it. Finally, bots are proficient in multiple languages and can even be used as translators.

What can the bots do?

What the bots can do is determined by their function calls. In addition to their large training dataset, bots have access to information hosted in our interface. AI agents can currently perform the following tasks:

Have a conversation

Users can interact and speak with the bots as if they were another coworker. Bots can now understand when you talk to them with your own voice, and they even have a voice of their own with which they can answer you.

OpenAI's Realtime technology allows the bots to listen to users and answer them out loud. However, bots that use only the basic GPT-4 technology are still limited to written communication.

Have a personality

The personalities of bots can be easily configured in our interface while writing the prompt. Hence, you can choose how they will speak, behave and react throughout interactions.

Short-term memory

The bots can remember the last discussion they had with you.

Searching the "members" database

The bots can search the "members" database to **find information about their coworkers**.

For instance, if asked "Who is John?" the bot will search the "members" database for a coworker named "John". This can be very useful if users fill their profile with information about themselves.
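Since the bots' capabilities are exposed as function calls, a lookup like this can be pictured as a function definition plus a handler. The following is only a sketch under assumptions: the `search_members` name, its `query` parameter, the `Member` shape, and the matching logic are all hypothetical, and the definition only loosely follows the JSON Schema style used by OpenAI function calling. The actual functions used by the bots are not documented here.

```typescript
// Hypothetical member record; the real "members" database schema is not documented here.
interface Member {
  name: string;
  bio: string;
}

// Hypothetical function definition, loosely following the JSON Schema style
// used by OpenAI function calling.
const searchMembersTool = {
  type: "function",
  name: "search_members",
  description: "Find coworkers whose name matches the query.",
  parameters: {
    type: "object",
    properties: {
      query: { type: "string", description: "A name or part of a name, for example John" },
    },
    required: ["query"],
  },
};

// Hypothetical handler: a case-insensitive substring match on the member name.
function searchMembers(members: Member[], query: string): Member[] {
  const needle = query.trim().toLowerCase();
  if (needle === "") {
    return [];
  }
  return members.filter((m) => m.name.toLowerCase().includes(needle));
}

// Illustrative data only.
const members: Member[] = [
  { name: "John Doe", bio: "Backend developer, based in Paris." },
  { name: "Jane Smith", bio: "Product designer." },
];

// Asking "Who is John?" would translate into a call such as this one.
const matches = searchMembers(members, "John");
```

In this sketch, the richer the `bio` field, the more the bot has to work with when answering, which is why filled-in profiles matter.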

Sending email messages

The bots can send emails to the coworkers registered in the system.

Summarizing meetings and interactions

Bots can summarize all the information exchanged during a meeting or an encounter with a user. This summary can then be sent as an email.

Make analyses and evaluations

During a meeting or a conversation, bots can ask questions and analyse the answers of the participants.

Reading the map

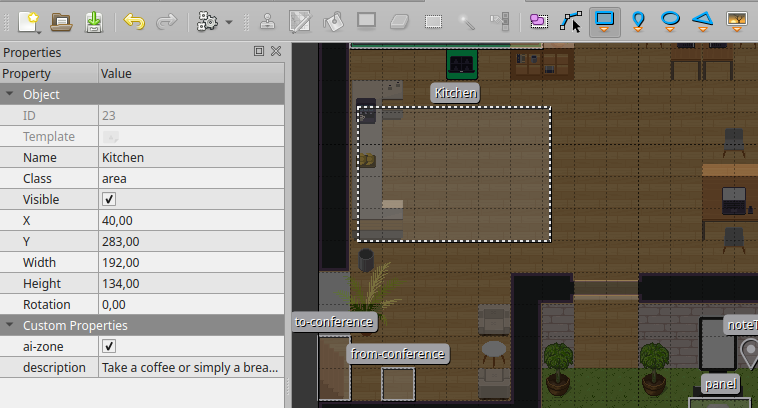

The bot can understand a textual representation of the map. This textual representation should be stored in the description of the area in the map editor. Alternatively, if you are building maps with Tiled, you can store the representation of the map in the Tiled map (the TMJ file).

In order to create such a representation, in Tiled:

- Create a rectangular object on any "object layer" of your map

- The object class must be "area"

- The name of the area must be descriptive. You can call it "Kitchen" or "Living room" for instance.

- You must now add 2 properties to the object:

  - ai-zone: a boolean property that tells the bot that this area is a zone where it can go

  - description: a description of the area
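As a rough illustration, here is how such an object might look once exported to a TMJ file, written as a TypeScript literal. This is a sketch under assumptions: the coordinates and the description text are made up, the `isAiZone` helper is hypothetical, and the field that holds the object class (`class` or `type`) depends on the Tiled version, so the helper accepts both.

```typescript
// Minimal shape of a custom property as stored in a Tiled JSON (TMJ) file.
interface TiledProperty {
  name: string;
  type: string;
  value: boolean | number | string;
}

// Minimal shape of a rectangular Tiled object (only the fields used here).
interface TiledObject {
  name: string;
  // Depending on the Tiled version, the object class is exported as "class" or "type".
  class?: string;
  type?: string;
  x: number;
  y: number;
  width: number;
  height: number;
  properties?: TiledProperty[];
}

// Example area: a rectangle named "Kitchen" carrying the two required properties.
// Coordinates and description are illustrative values.
const kitchen: TiledObject = {
  name: "Kitchen",
  class: "area",
  x: 320,
  y: 128,
  width: 256,
  height: 192,
  properties: [
    { name: "ai-zone", type: "bool", value: true },
    { name: "description", type: "string", value: "A kitchen with a coffee machine and a large table." },
  ],
};

// Hypothetical helper (not part of any documented API): checks that an object
// follows the rules listed above.
function isAiZone(obj: TiledObject): boolean {
  const objectClass = obj.class ?? obj.type;
  const props = obj.properties ?? [];
  const aiZoneValue = props.find((p) => p.name === "ai-zone")?.value;
  const descriptionValue = props.find((p) => p.name === "description")?.value;
  return (
    objectClass === "area" &&
    obj.name.trim().length > 0 &&
    aiZoneValue === true &&
    typeof descriptionValue === "string" &&
    descriptionValue.length > 0
  );
}
```

A check like this can be handy before publishing a map: an area with a missing or empty `description` would give the bot nothing to read.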

Guiding someone on the map

The bot can guide someone to a specific area on the map. In order to guide someone, the bot must know the name of the area where it should guide the user. See the previous section to learn how to create such an area.

Moving around the map and greeting visitors

With the proper prompt and area configuration, the bots can move around the selected areas. They can also tell the difference between a coworker and a visitor, so you can instruct them to go and greet visitors every time they arrive on the map.

Ending a conversation

When the bot understands the conversation is over, it will walk away.

Saying nothing

One of the most important capabilities of the bot is to stay silent (!). Indeed, when in a conversation with several users, the bot must know when to speak and when to keep quiet. It will try to understand if the sentence is addressed to it and will only respond if it is.

Bot features coming soon

Although the bots are already very smart and can perform numerous tasks, there is a feature that might get implemented in the future:

Retrieval Augmented Generation (RAG)

RAG is a way to extend what the Large Language Model (LLM) behind the bots can draw on. With this mechanism, the AI agents could access larger amounts of information and data, removing the current limitation that restricts bots to the content already hosted in our interface.